Examine The Terms And Conditions For Logging In And Using The Website

By logging in or accepting a website’s terms and conditions, you agree to its rules on web scraping. Be wary, however, because those terms may directly state that you’re not authorized to scrape any data. If you need to sign in to scrape data, carefully review the terms you’ll be agreeing to first.

Remember that caching pages can help you avoid making unnecessary requests. Knowing which pages the web scraper has already visited speeds up the process. It also boosts the overall pace of data extraction, because previously fetched pages don’t have to be requested again and the server has less load to handle. You can achieve this by caching HTTP (Hypertext Transfer Protocol) requests and responses: write them to a file for a one-time task, or to a database if you scrape repeatedly.
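The caching advice can be sketched in a few lines of Python. This is a minimal illustration for the one-time, write-to-a-file case, not a complete HTTP cache: the `scrape_cache` directory and the names `cache_path`, `download`, and `fetch_cached` are assumptions made for this example, and it ignores cache expiry and response headers.

```python
import hashlib
import urllib.request
from pathlib import Path

CACHE_DIR = Path("scrape_cache")  # hypothetical folder for cached pages


def cache_path(url: str, cache_dir: Path = CACHE_DIR) -> Path:
    """Map a URL to a stable file name by hashing it."""
    digest = hashlib.sha256(url.encode("utf-8")).hexdigest()
    return cache_dir / f"{digest}.html"


def download(url: str) -> bytes:
    """Fetch a page over HTTP (the only function that touches the network)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read()


def fetch_cached(url: str, fetch=download, cache_dir: Path = CACHE_DIR) -> bytes:
    """Return the stored body if this URL was visited before, else fetch and store it."""
    path = cache_path(url, cache_dir)
    if path.exists():
        return path.read_bytes()  # cache hit: no request is sent
    body = fetch(url)
    cache_dir.mkdir(parents=True, exist_ok=True)
    path.write_bytes(body)
    return body
```

Calling `fetch_cached` twice with the same URL sends only one request; the second call reads the saved file. For a scraper that runs repeatedly, the same idea applies with a database table keyed by URL in place of the folder.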

Scraping during off-peak hours will prevent the website servers from overloading.

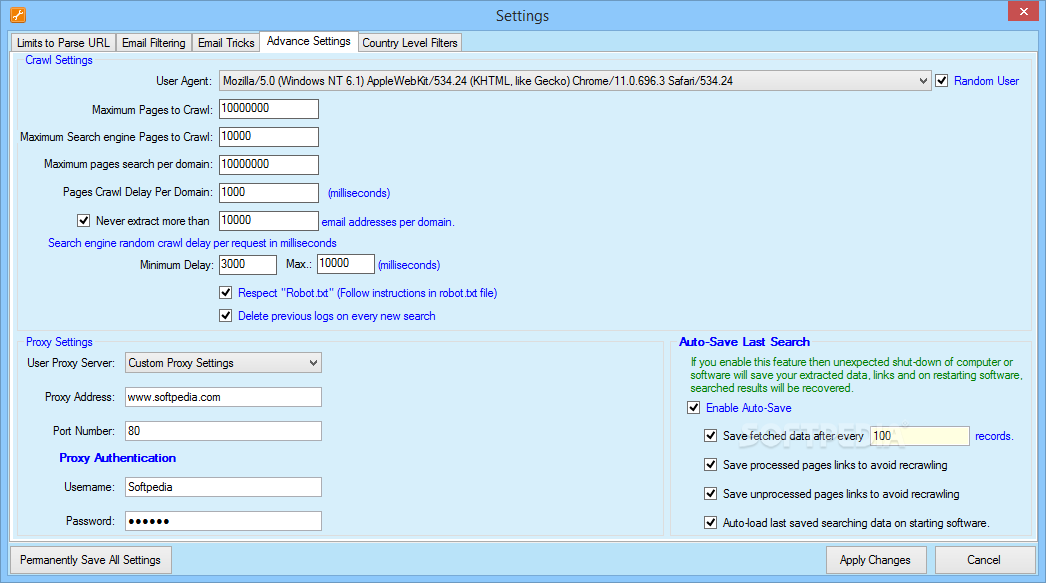

The location of where the majority of traffic originates can help you determine off-peak hours. Scheduling web crawling during off-peak hours is one way to ensure that the website you’re targeting won’t slow down. Scraping Should Be Done During Off-peak Hours As a result, the website will be able to unwind. Set the crawler to hit the target site at a fair frequency and try to cap the number of simultaneous queries if you don’t want to cause any disturbances to the website. It may result in an unpleasant experience for visitors when they’re browsing the website. Similar to human users, the presence of bots can be a burden to the website’s server. Avoid Flooding The Servers With Numerous RequestsĪn overloaded web server is vulnerable to downtime. You can use proxies to make sure nobody can see your real IP address or if you need to access or scrape restricted websites in your current location. If you’re running a business, you need a reliable proxy network. The ideal way to get around this limitation is to route your requests via a proxy network and rotate IPs frequently. The website will block the IP address once the request rate exceeds the threshold. Generally, websites have a set threshold on the number of requests they can get from a single IP address. Remember that websites will record every activity you’re doing on the website. If your request hits the server of a target website, it’ll be noted in a log. Make sure you’ll carefully follow the rules while extracting data from the website. If you continue to extract from websites that don’t allow crawling, you may face legal consequences and should avoid doing so.Īside from blocking access, each website has set rules on proper behavior on the site in robots.txt. Some websites are strict since they block crawler access in their robots.txt file. Generally, there are website rules on how the bots should interact with the site in the robots.txt file. 
If you plan to use web scraping, the first thing you need to do is check the robots.txt file of the website you’ll be extracting data from. Take Into Consideration The Rules In The Robots.Txt
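As a rough sketch, the robots.txt check and the advice about keeping a fair request frequency can be combined using Python’s standard `urllib.robotparser` module. The bot name `example-scraper`, the one-second fallback delay, and the helper names below are assumptions made for this example. `Crawl-delay` is a non-standard directive that only some sites publish, so the code falls back to the default pause when it is absent.

```python
import time
import urllib.robotparser

USER_AGENT = "example-scraper"  # hypothetical bot name
DEFAULT_DELAY = 1.0  # assumed pause (seconds) when robots.txt sets no Crawl-delay


def load_rules(robots_txt: str) -> urllib.robotparser.RobotFileParser:
    """Parse the text of a robots.txt file that has already been downloaded."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed_urls(parser, urls, user_agent=USER_AGENT):
    """Keep only the URLs that robots.txt lets this user agent fetch."""
    return [url for url in urls if parser.can_fetch(user_agent, url)]


def delay_for(parser, user_agent=USER_AGENT, default=DEFAULT_DELAY) -> float:
    """Use the site's Crawl-delay if it declares one, else the default pause."""
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default


def crawl(parser, urls, fetch, sleep=time.sleep):
    """Fetch allowed URLs one at a time, pausing between consecutive requests."""
    pause = delay_for(parser)
    pages = {}
    for index, url in enumerate(allowed_urls(parser, urls)):
        if index > 0:
            sleep(pause)  # spread requests out instead of sending them in a burst
        pages[url] = fetch(url)
    return pages
```

Here `fetch` is whatever function downloads a page. Because `crawl` requests pages sequentially, it never sends more than one request at a time, which is the simplest way to cap simultaneous requests. To load the rules straight from a live site, `RobotFileParser` also offers `set_url()` followed by `read()`.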
